Controversial Helpers

Artificial intelligence poses new challenges to society. That’s why Freiburg researchers from the fields of neurotechnology, computer science, robotics, law, and philosophy are teaming up for a year to grapple with issues concerning the normative and philosophical foundations of human interaction with artificial intelligence as well as the associated ethical, legal, and social challenges.

Ethical, legal, and social challenges: Freiburg researchers are addressing questions concerning the interaction between humans and artificial intelligence. Photo: Linda Bucklin/Shutterstock

Just under a year ago, Facebook presented a new function: an algorithm designed to identify whether a user is at risk of committing suicide. The “preventive tool,” as its developers call it, scans members’ posts, videos, and comments for keywords that supposedly signal suicidal intent. When the system flags a conspicuous post, it alerts the platform’s staff, who in an emergency then contact the person’s family or first responders. The person concerned could therefore hear a knock at the door from the police or paramedics shortly afterward, all because an artificial intelligence calculated a high probability of suicide risk. The algorithm is currently in use only in the USA, but Facebook has announced plans to roll it out worldwide, with the exception of the European Union, likely because of legal complications. “The result of these analyses is a far-reaching violation of personal freedom, and the more specific such predictions become, the more they limit rights,” says Dr. Philipp Kellmeyer.

Who Is Accountable?

The neuroscientist sees Facebook’s algorithm as an example of the challenges that the development of artificial intelligence poses to society. Kellmeyer has teamed up with the computer scientist and robotics specialist Prof. Dr. Wolfram Burgard, the legal scholar Prof. Dr. Silja Vöneky, and Prof. Dr. Oliver Müller from the University of Freiburg’s Department of Philosophy in a research focus at the Freiburg Institute for Advanced Studies (FRIAS). For one year, the team will grapple with issues concerning the normative and philosophical foundations of human interaction with artificial intelligence (AI) as well as the associated ethical, legal, and social challenges. The research focus “Responsible Artificial Intelligence – Normative Aspects of the Interaction of Humans and Intelligent Systems” will serve as the starting point and core of further projects involving researchers from the Cluster of Excellence BrainLinks–BrainTools, scientists working at the new Intelligent Machine-Brain Interfacing Technology (IMBIT) research building, and FRIAS international fellows.

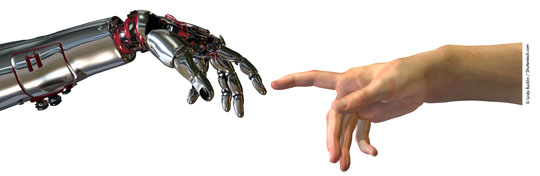

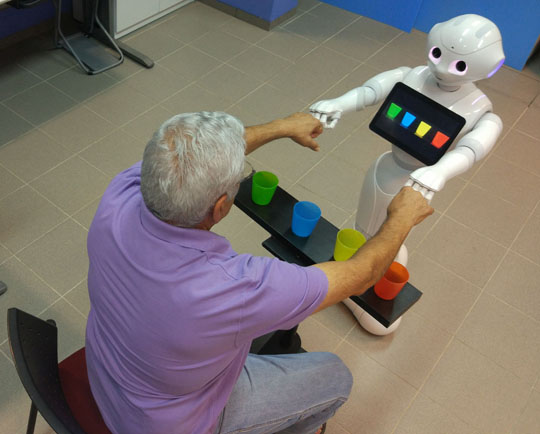

Kellmeyer’s focus in the project is on the use of AI in medicine and neurotechnology. In the future, intelligent assistance systems could suggest diagnoses or treatment strategies, thus supporting doctors in their work; initial pilot projects are already underway. Intelligent robots could also be used in therapy, for instance as rehabilitation aids for stroke patients. Moreover, University of Freiburg robotics researchers, neuroscientists, and microsystems engineers are working on so-called human–machine interfaces, which analyze brain signals measured by electrodes and transmit them to a prosthesis. “When we transfer particular abilities to an AI, we have to ask who is accountable for them,” says Kellmeyer. If the system makes a wrong decision, there is no entity that can be held legally accountable for it. “At the moral and regulatory level, the question is therefore whether current technological means or the available legal instruments are sufficient to contain AI and to limit damage.”

Human–machine interfaces analyze brain signals measured via electrodes and transmit them to a prosthesis. Photo: Martin Völker/AG Ball

Humans as the Final Authority

Up to now, robots have been programmed in such a way that it is possible to determine why they act in a certain way. Much like the flight recorders installed in airplanes, explains Kellmeyer, system logs provide information on the robot’s actions. “If the underlying algorithm that controls the system has a kind of analytical intelligence and the system changes over time, however, it is difficult to predict how it will behave.” There are technical means of checking this loss of control. One option under discussion is to have a human serve as the final authority who can interrupt the machine’s action. This principle, in which a person can intervene at a critical point in an action initiated by the AI, is called “human-in-the-loop.” It is being studied especially in the context of autonomous weapon systems. “In recent studies, however, a kind of fatigue effect appeared in people tasked with monitoring a system’s recommendations: they no longer questioned the decision but simply gave their approval by pressing a button.” Kellmeyer fears that a similar problem could occur in medical recommendation systems: “The doctor’s ability to scrutinize a decision could also decline, either because it’s easier to trust the machine or because the doctor, who cannot keep a full overview of the patient’s blood counts over the past ten years, is at an analytical disadvantage compared to the machine.”

In addition to technical solutions, there are therefore growing calls for a new legal framework and new legal instruments governing the use of AI. With regard to brain–computer interfaces, for instance, so-called neurolaws are under discussion. They would make it possible to restrict the lawful uses of systems capable of extracting people’s views, preferences, or other personal information from brain data. They could also limit the commercial exploitation of such data by companies. Facebook, for example, is also researching interfaces that translate brain activity directly into user input. In 2017, the company presented its research plans in this area under the slogan “So what if you could type directly from your brain?”

A robot congratulates a stroke patient because he correctly sorted colored cups according to instructions. Photo: Shelly Levy-Tzedek

Legal Protection of Data

For Kellmeyer, the main problem here is a lack of transparency: “It is wholly unclear what happens with the enormous amounts of data collected in the process. What happens, for example, when sensitive personal information pointing to a disease like epilepsy is hidden in these data?” One conceivable solution would be special legal protection for data containing information on a person’s state of health: “In the future, an algorithm that has previously analyzed a person’s posts and browsing patterns, and perhaps also recorded how often he or she leaves the house, could predict whether that person will soon suffer a depressive episode. However, statistics like this would need to be treated as biomedical data,” believes Kellmeyer. This term has traditionally covered data from medical examinations, such as blood test results or blood pressure readings, but the development of AI could render this definition outdated.

These are questions no discipline can answer on its own, sums up Kellmeyer in view of his interdisciplinary team at FRIAS. “When one takes a look at the possible uses of AI systems, it becomes clear what challenges we are set to face in the coming years.” The goal of the new research focus is thus to address these challenges early on and work toward a solution together with all relevant societal actors, says Kellmeyer, always keeping in mind the central concept of responsibility in dealing with AI. Various disciplines can contribute their expertise to the topic, and there are also potential links for exchange with the public and for questions of political control. “People in the public discourse are developing apocalyptic scenarios revolving around AI at the moment. That is not helpful, in my opinion,” says Kellmeyer. “Nor, however, is the belief that AI systems are the solution to all of our problems. The truth lies somewhere in the middle.”

First published in the Online Magazine research & discover of the University of Freiburg

19/01/23